Imagine asking questions about a website in natural language and receiving meaningful answers instead of simple keyword matches.

In this training session, I’ll introduce Allycat, an open-source, end-to-end stack that enables conversational interaction with website content using Large Language Models (LLMs).

We will cover the following:

- Crawling and indexing website content

- Cleaning and extracting meaningful information from HTML

- Creating embeddings and storing them in a vector database

- Querying the data using an LLM for contextual, accurate responses

- Running SLMs (Small Language Models) locally using Ollama, or LLMs (Large Language Models) in the cloud

- Packaging the whole stack into a Docker container that can be deployed anywhere
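
To make the flow above concrete, here is a minimal sketch of the clean, embed, store, and retrieve steps using only the Python standard library. It is not Allycat's code and does not use the Docling, Milvus, or llama-index APIs: the word-count "embedding", the in-memory list acting as a vector store, the `PAGES` sample data, and every function name are hypothetical stand-ins chosen for illustration. Crawling is replaced by hard-coded HTML strings.

```python
import math
import re
from collections import Counter
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> content."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    """Cleaning step: reduce an HTML page to its visible text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def embed(text):
    """Toy embedding (assumption): a sparse word-count vector.
    A real stack would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(pages):
    """Indexing step: an in-memory list stands in for a vector database."""
    index = []
    for url, html in pages.items():
        text = html_to_text(html)
        index.append((url, text, embed(text)))
    return index


def retrieve(index, question, top_k=1):
    """Query step: return the top_k pages most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda e: cosine(q, e[2]), reverse=True)
    return [(url, text) for url, text, _ in ranked[:top_k]]


# Hypothetical sample pages standing in for crawled content.
PAGES = {
    "https://example.com/pricing": (
        "<html><body><h1>Pricing</h1>"
        "<p>The team plan costs 20 dollars per month.</p>"
        "<script>track()</script></body></html>"
    ),
    "https://example.com/about": (
        "<html><body><h1>About</h1>"
        "<p>We are a community building open tools.</p></body></html>"
    ),
}

question = "How much does the team plan cost per month?"
index = build_index(PAGES)
hits = retrieve(index, question)

# The retrieved text would be passed to an LLM as context for the answer.
prompt = f"Answer using only this context:\n{hits[0][1]}\n\nQuestion: {question}"
```

In a real deployment, each stand-in would be swapped for the corresponding component from the tech stack, and `prompt` would be sent to a local or cloud model rather than left as a string.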

Tech Stack

- Docling to parse HTML (https://github.com/DS4SD/docling)

- A vector database such as Milvus to store documents (https://milvus.io/)

- llama-index as the application framework (https://developers.llamaindex.ai/python/framework/)

- Ollama (https://ollama.com/) to run local models and Nebius AI Studio (https://studio.nebius.com/) to run large models

- LiteLLM (https://docs.litellm.ai/docs/) to seamlessly switch between models

The entire stack is built with Python and open-source components, making it easy to adapt and extend.

You will walk away with working code templates and examples that you can immediately adapt to your organization's needs.

You can check out Allycat here: https://github.com/The-AI-Alliance/allycat

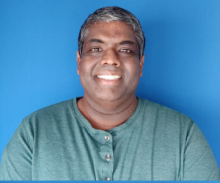

Speaker

Sujee Maniyam

Developer Advocate @Nebius AI, Open Source Contributor

Sujee Maniyam is a seasoned practitioner focusing on AI, Big Data, Distributed Systems, and Cloud technologies. He combines deep technical expertise with a passion for developer advocacy, empowering developers to build impactful AI-driven applications. Sujee loves sharing knowledge through workshops, talks, and open source. You can find more of his work at https://sujee.dev