Abstract

Search is undergoing a revolutionary transformation, shifting from traditional linear queries to a rich multiverse of possibilities powered by AI. This talk explores three critical domains reshaping the search experience: multimodal interactions, personalization through large user sequences, and the emerging role of AI agents for application simulation. Through production infrastructure examples and performance metrics across leading LLMs, the session examines the practical implementation challenges and solutions. The discussion concludes with insights into 2025+ market dynamics, including the emergence of agent-first search platforms and the impact of on-device intelligence on traditional search business models.

Speaker

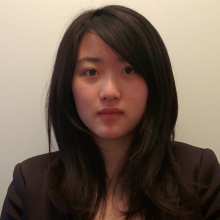

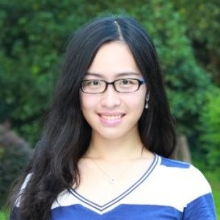

Faye Zhang

Staff Software Engineer @Pinterest, Tech Lead on GenAI Search Traffic Projects, Speaker, Expert in AI/ML with a Strong Background in Large Distributed Systems

Faye Zhang is a staff AI engineer and tech lead at Pinterest, where she leads multimodal AI work for search traffic discovery, driving significant user growth globally. She combines expertise in large-scale distributed systems with cutting-edge NLP and AI agent research at Stanford. She also volunteers in AI x genomic science for mRNA sequence analysis, with work published in multiple scientific journals. As a recognized thought leader, Faye regularly shares insights at conferences across San Francisco and Paris.